Having No Fun with Rubygems, Systemd, Docker and Networking

Image credit: DALL-E

Recently I faced a rather strange issue with networking and Docker: I could neither install gems with Rubygems in a container nor reach any other service on an external IP address. In this article I describe how I solved the issue and what I learned about systemd-networkd.

Setup

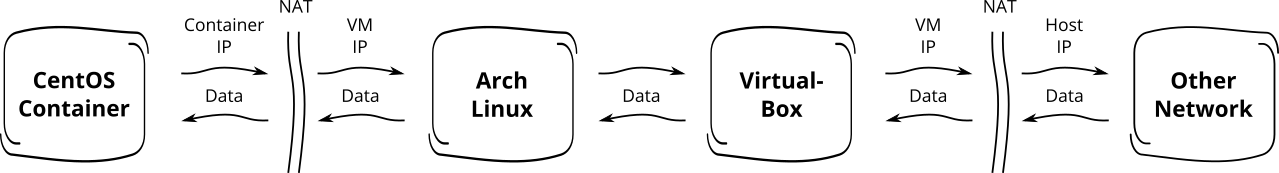

I use VirtualBox to run virtual machines (VMs). The VM I normally use for my daily work runs Arch Linux, a rolling-release Linux distribution that uses systemd as PID 1. Within that VM I have the latest version of Docker installed (version 1.8). All network traffic from the VM to the “outer world” uses the IP address of the virtualization host: the VM’s IP address is translated into the host’s address using Network Address Translation (NAT). All Docker containers are connected to the default private Docker network, which is also a NAT network. systemd-networkd manages the network interfaces of my VM. Fig. 1 gives an overview of my local setup.

Issue

The trouble started when I tried to install some gems in a Docker container that I had set up to speed up development of a “Ruby on Rails” application.

First, I saw DNS SRV requests that I had never seen before with Rubygems. It turned out that these are regular requests made by Rubygems. The functionality was introduced to locate the server for the Rubygems network API and to make it easier to migrate to a different server in the future [1].

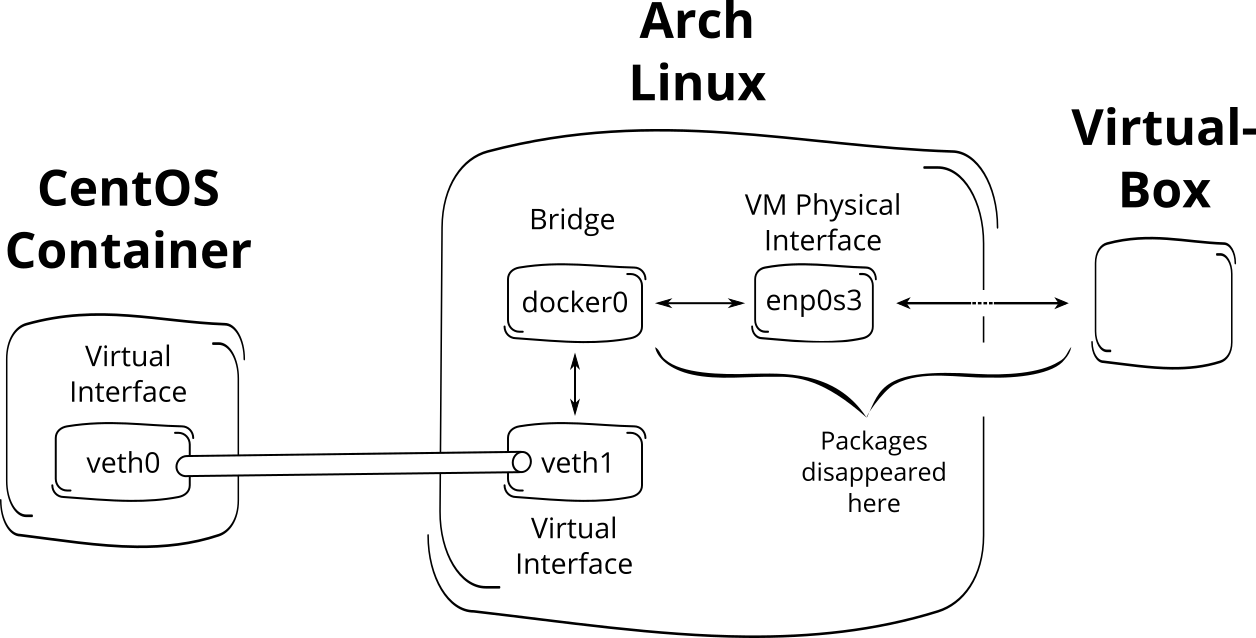

The network trace captured with Wireshark showed that the requests sent by Rubygems were answered by the name server, but the responses were not forwarded to the container. They simply “disappeared”. See Fig. 2 for the problematic section of my setup.

The VM has a “physical” interface enp0s3, which is connected to the bridge docker0 and, via VirtualBox, to the “outer world”. This bridge is set up by Docker itself. All containers are connected to that bridge, each container with its own pair of veth interfaces. Those veth interfaces are like “linked twins” that mirror packets between different network namespaces. A quite good introduction to veth and network namespaces can be found here:

Analysis

Forwarding #1

My first guess was that something was wrong with IP forwarding in my VM, but when I checked the settings, everything looked fine.

% sudo sysctl -a | grep forwarding

# [...]

# => net.ipv4.conf.all.forwarding = 1

# [...]

DNS

Next, I started a CentOS container with Ruby installed, using the following commands in a terminal.

# Pull image

% docker pull feduxorg/centos-ruby

# Run container

% docker run --rm -it --name centos1 feduxorg/centos-ruby bash

After the container was up, I ran the gem command again and tried to install bundler. Unfortunately, that still failed.

[root@1efaeac0e16a /]$ gem install bundler

# => ERROR: Could not find a valid gem 'bundler' (>= 0), here is why:

# => Unable to download data from https://rubygems.org/ - no

# => such name (https://rubygems.org/specs.4.8.gz)

Following that, I searched for help and found this issue on GitHub. With that information, I installed the host command in the CentOS container to emulate the requests sent by Rubygems. But that didn’t help me find the cause of the problem.

[root@1efaeac0e16a /]$ host -t srv _rubygems._tcp.rubygems.org

# => ;; connection timed out; trying next origin

# => ;; connection timed out; no servers could be reached

Network

After some discussion with a colleague, I checked whether there was a general problem with my network setup. And yes, even pinging an external IP address failed. Damn …

[root@1efaeac0e16a /]$ ping 8.8.8.8

# => PING 8.8.8.8 (8.8.8.8) 56(84) bytes of data.

^C

# => --- 8.8.8.8 ping statistics ---

# => 5 packets transmitted, 0 received, 100% packet loss, time 4007ms

Firewall

Next I checked whether Docker had set up all firewall rules correctly. First I looked at the “filter” table: all chains had the policy “ACCEPT”, plus some additional rules. So everything seemed OK here.

% sudo iptables -nvL

# [...]

# => Chain FORWARD (policy ACCEPT 0 packets, 0 bytes)

# => pkts bytes target prot opt in out source destination

# => 0 0 DOCKER all -- * docker0 0.0.0.0/0 0.0.0.0/0

# => 0 0 ACCEPT all -- * docker0 0.0.0.0/0 0.0.0.0/0 ctstate RELATED,ESTABLISHED

# => 5 1052 ACCEPT all -- docker0 !docker0 0.0.0.0/0 0.0.0.0/0

# => 0 0 ACCEPT all -- docker0 docker0 0.0.0.0/0 0.0.0.0/0

# [...]

But those counters looked strange. Although the counter for outgoing packets had been incremented, the counter for incoming packets was still “0”.

# [...]

# => 0 0 DOCKER all -- * docker0 0.0.0.0/0 0.0.0.0/0

# => 0 0 ACCEPT all -- * docker0 0.0.0.0/0 0.0.0.0/0 ctstate RELATED,ESTABLISHED

# [...]
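Zero counters like these are easy to miss in a long listing. As a minimal sketch, the filter below reads `iptables -nvL` output on standard input and prints the rules of one chain that have not matched a single packet. The function name `zero_hit_rules` is hypothetical and not part of iptables, and the sketch assumes the column layout shown above, with the packet counter in the first column.

```shell
#!/bin/sh
# Hypothetical helper: print the rules of one chain whose packet counter is 0.
# Reads the output of `iptables -nvL` on stdin; $1 is the chain name.
zero_hit_rules() {
  awk -v chain="$1" '
    $1 == "Chain" { in_chain = ($2 == chain); next }  # track the current chain
    !in_chain || NF == 0 || $1 == "pkts" { next }      # skip other chains, blanks, header
    $1 == "0" { print }                                # packet counter is column 1
  '
}

# Sample taken from the listing above (the "# => " prefix removed).
sample='Chain FORWARD (policy ACCEPT 0 packets, 0 bytes)
pkts bytes target prot opt in out source destination
0 0 DOCKER all -- * docker0 0.0.0.0/0 0.0.0.0/0
0 0 ACCEPT all -- * docker0 0.0.0.0/0 0.0.0.0/0 ctstate RELATED,ESTABLISHED
5 1052 ACCEPT all -- docker0 !docker0 0.0.0.0/0 0.0.0.0/0
0 0 ACCEPT all -- docker0 docker0 0.0.0.0/0 0.0.0.0/0'

printf '%s\n' "$sample" | zero_hit_rules FORWARD
```

On a live system the same filter could be fed with `sudo iptables -nvL | zero_hit_rules FORWARD`. For the sample it prints the three rules with a zero counter, including the two rules for traffic going out through docker0, i.e. towards the containers.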

Network Address Translation

Then I also checked the “nat” table: again, all chains had the policy “ACCEPT”, and the MASQUERADE rule existed and had some matches.

% sudo iptables -nvL -t nat

# [...]

# => Chain POSTROUTING (policy ACCEPT 15 packets, 972 bytes)

# => pkts bytes target prot opt in out source destination

# => 9 619 MASQUERADE all -- * !docker0 172.17.0.0/16 0.0.0.0/0

# [...]

Hmm … I checked the iptables chains and the output of sysctl several more times, but I didn’t have a clue what was wrong. I searched again and came across conntrack, a command-line interface for “netfilter connection tracking”. I installed the package “conntrack-tools” in my Arch Linux VM and ran the command to track the translation of IP addresses.

% sudo conntrack -E -j

# => [NEW] icmp 1 30 src=172.17.0.1 dst=8.8.8.8 type=8 code=0 id=54 [UNREPLIED] src=8.8.8.8 dst=10.0.2.15 type=0 code=0 id=54

# => [UPDATE] icmp 1 30 src=172.17.0.1 dst=8.8.8.8 type=8 code=0 id=54 src=8.8.8.8 dst=10.0.2.15 type=0 code=0 id=54

At least the masquerading rule of iptables seemed to work: the container IP address 172.17.0.1 was rewritten to the VM IP address 10.0.2.15 (assigned to the VM by the DHCP server of VirtualBox) and vice versa. Otherwise the [UPDATE] line would not be there.
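To read the translation off the event lines more easily, a small filter can pair the original source address with the address that replies are sent to. This is only a sketch: `nat_mapping` is a hypothetical name, and it assumes the line format shown above, where the first `src=` is the original sender and the last `dst=` is the reply destination.

```shell
#!/bin/sh
# Hypothetical helper: show "original source -> translated address" for
# conntrack event lines read from stdin. Lines without translation
# (original source equals reply destination) are skipped.
nat_mapping() {
  awk '{
    orig_src = ""; reply_dst = ""
    for (i = 1; i <= NF; i++) {
      if ($i ~ /^src=/ && orig_src == "") orig_src = substr($i, 5)  # first src=: original sender
      if ($i ~ /^dst=/) reply_dst = substr($i, 5)                   # last dst=: where replies go
    }
    if (orig_src != "" && reply_dst != "" && orig_src != reply_dst) print $1, orig_src, "->", reply_dst
  }'
}

# The two events from the trace above.
nat_mapping <<'EOF'
[NEW] icmp 1 30 src=172.17.0.1 dst=8.8.8.8 type=8 code=0 id=54 [UNREPLIED] src=8.8.8.8 dst=10.0.2.15 type=0 code=0 id=54
[UPDATE] icmp 1 30 src=172.17.0.1 dst=8.8.8.8 type=8 code=0 id=54 src=8.8.8.8 dst=10.0.2.15 type=0 code=0 id=54
EOF
```

For both events the filter reports that 172.17.0.1 is mapped to 10.0.2.15, which matches the reading of the trace given above.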

Comparing behaviour with other VM

Then I tried to reproduce the issue on a different virtual machine: I set up a new VM with Arch Linux on the same host where the problem had occurred. Fortunately, I was not able to reproduce the problem with the new VM. At least I now knew that the problem was not in my overall setup, but only in that one particular VM.

Forwarding #2

After that, I checked the forwarding settings once again. It turned out that IP forwarding was deactivated only for the external interface enp0s3. I had simply overlooked that line. Argh …

% sudo sysctl -a | grep forwarding

# => net.ipv4.conf.all.forwarding = 1

# => net.ipv4.conf.all.mc_forwarding = 0

# => net.ipv4.conf.default.forwarding = 1

# => net.ipv4.conf.default.mc_forwarding = 0

# => net.ipv4.conf.docker0.forwarding = 1

# => net.ipv4.conf.docker0.mc_forwarding = 0

#####

# => net.ipv4.conf.enp0s3.forwarding = 0

#####

# => net.ipv4.conf.enp0s3.mc_forwarding = 0

# => net.ipv4.conf.lo.forwarding = 1

# => net.ipv4.conf.lo.mc_forwarding = 0

# => net.ipv4.conf.veth25f2de1.forwarding = 1

# => net.ipv4.conf.veth25f2de1.mc_forwarding = 0
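A narrower filter would have made the disabled interface stand out immediately. The sketch below prints only the interfaces whose IPv4 unicast forwarding flag is 0 and ignores the `mc_forwarding` entries. The function name `disabled_forwarding` is hypothetical, and the pattern assumes the `key = value` format that sysctl prints above.

```shell
#!/bin/sh
# Hypothetical helper: list interfaces with net.ipv4.conf.<iface>.forwarding = 0.
# Reads `sysctl -a` output on stdin. mc_forwarding lines do not match,
# because the pattern requires a dot directly before "forwarding".
disabled_forwarding() {
  sed -n 's/^net\.ipv4\.conf\.\(.*\)\.forwarding = 0$/\1/p'
}

# Sample: a subset of the output shown above.
disabled_forwarding <<'EOF'
net.ipv4.conf.all.forwarding = 1
net.ipv4.conf.all.mc_forwarding = 0
net.ipv4.conf.docker0.forwarding = 1
net.ipv4.conf.docker0.mc_forwarding = 0
net.ipv4.conf.enp0s3.forwarding = 0
net.ipv4.conf.enp0s3.mc_forwarding = 0
net.ipv4.conf.lo.forwarding = 1
EOF
```

For the sample the only line printed is `enp0s3`. On a live system the input would come from `sudo sysctl -a | disabled_forwarding`.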

I wondered why on earth this setting had been disabled for this particular interface. But then it dawned on me: I had decided to use systemd-networkd in the virtual machine to manage my network interfaces, a choice I had never regretted so far. So I checked the manual for network units and found this section.

Note: unless this option is turned on, or set to "kernel", no IP forwarding is done on this interface, even if this is globally turned on in the kernel, with the net.ipv4.ip_forward, net.ipv4.conf.all.forwarding, and net.ipv6.conf.all.forwarding sysctl options. [systemd.network](http://www.freedesktop.org/software/systemd/man/systemd.network.html#IPForward=)-manual

Solution

Activating the forwarding for that interface made the problem disappear.

% sudo sysctl net.ipv4.conf.enp0s3.forwarding=1

# => net.ipv4.conf.enp0s3.forwarding = 1

% sudo sysctl -a | grep forwarding

# => net.ipv4.conf.all.forwarding = 1

# [...]

# => net.ipv4.conf.docker0.forwarding = 1

# => net.ipv4.conf.enp0s3.forwarding = 1

# => net.ipv4.conf.veth25f2de1.forwarding = 1

# [...]

When I sent a ping again, it was successful.

[root@1efaeac0e16a /]$ ping 8.8.8.8

# => PING 8.8.8.8 (8.8.8.8) 56(84) bytes of data.

# => 64 bytes from 8.8.8.8: icmp_seq=1 ttl=242 time=4.22 ms

# => 64 bytes from 8.8.8.8: icmp_seq=2 ttl=242 time=2.90 ms

# => 64 bytes from 8.8.8.8: icmp_seq=3 ttl=242 time=3.09 ms

^C

# => --- 8.8.8.8 ping statistics ---

# => 3 packets transmitted, 3 received, 0% packet loss, time 2004ms

# => rtt min/avg/max/mdev = 2.902/3.409/4.228/0.584 ms

To make the change permanent, I also changed the network unit for the network interface enp0s3 and restarted the system.

[Match]

# Match all network interfaces starting with "en"

Name=en*

[Network]

# [...]

# Activate IP forwarding for the interfaces

IPForward=true
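Because a missing option leaves forwarding off without any warning, it can help to check what a unit file actually sets. The following sketch prints the value of `IPForward=` from the `[Network]` section of a file, or `unset` if the option is absent. The function name `ipforward_value` is hypothetical, the temporary file only stands in for a real network unit, and the sketch assumes that section headers and options start at the beginning of the line, as in the unit above.

```shell
#!/bin/sh
# Hypothetical helper: print the IPForward= value from the [Network]
# section of the unit file given as $1, or "unset" if it is missing.
ipforward_value() {
  awk '
    /^\[/ { section = $0; next }                 # remember the current section header
    section == "[Network]" && /^IPForward=/ {
      sub(/^IPForward=/, ""); value = $0         # keep the last assignment
    }
    END { print (value == "" ? "unset" : value) }
  ' "$1"
}

# Demo with a temporary file that mirrors the unit shown above.
unit=$(mktemp)
cat > "$unit" <<'EOF'
[Match]
Name=en*

[Network]
IPForward=true
EOF
ipforward_value "$unit"
rm -f "$unit"
```

For the demo file the function prints `true`.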

After the reboot, forwarding was still enabled for all relevant interfaces.

% sudo sysctl -a | grep forwarding

# => net.ipv4.conf.all.forwarding = 1

# [...]

# => net.ipv4.conf.docker0.forwarding = 1

# => net.ipv4.conf.enp0s3.forwarding = 1

# => net.ipv4.conf.veth25f2de1.forwarding = 1

# [...]

When I started another ping, it was still successful.

[root@1efaeac0e16a /]$ ping 8.8.8.8

# => PING 8.8.8.8 (8.8.8.8) 56(84) bytes of data.

# => 64 bytes from 8.8.8.8: icmp_seq=1 ttl=242 time=8.63 ms

# => 64 bytes from 8.8.8.8: icmp_seq=2 ttl=242 time=8.14 ms

# => 64 bytes from 8.8.8.8: icmp_seq=3 ttl=242 time=8.15 ms

^C

# => --- 8.8.8.8 ping statistics ---

# => 3 packets transmitted, 3 received, 0% packet loss, time 2003ms

# => rtt min/avg/max/mdev = 8.144/8.312/8.633/0.227 ms

And finally I could install bundler.

[root@1efaeac0e16a /]$ gem install bundler

# => Successfully installed bundler-1.10.6

# => 1 gem installed

Conclusion

I was quite happy that my problem was not a general one and that I could fix it myself. But please be careful with Docker, systemd-networkd, Linux and IP forwarding: make sure you activate forwarding for ALL relevant interfaces, and make yourself familiar with all components involved in your network setup. Maybe it would even make sense for the Docker developers to implement some kind of check command that verifies everything is set up as Docker requires.
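To illustrate what such a check could look like, here is a minimal sketch that is not part of Docker. It walks the per-interface `forwarding` files below `/proc/sys/net/ipv4/conf` and reports every interface where forwarding is off. The function name `check_forwarding` is hypothetical, and the directory can be overridden so that the demo runs against a temporary tree instead of the real system.

```shell
#!/bin/sh
# Hypothetical check: report interfaces whose IPv4 forwarding flag is not 1.
# $1 is the directory to scan (default: the kernel's per-interface settings).
# Returns 0 if forwarding is enabled everywhere, 1 otherwise.
check_forwarding() {
  conf_dir=${1:-/proc/sys/net/ipv4/conf}
  status=0
  for f in "$conf_dir"/*/forwarding; do
    [ -r "$f" ] || continue                      # skip unreadable or missing files
    if [ "$(cat "$f")" != "1" ]; then
      iface=$(basename "$(dirname "$f")")        # the directory name is the interface
      echo "forwarding disabled: $iface"
      status=1
    fi
  done
  return "$status"
}

# Demo against a fake tree that mimics the broken state described above.
demo=$(mktemp -d)
mkdir -p "$demo/docker0" "$demo/enp0s3"
echo 1 > "$demo/docker0/forwarding"
echo 0 > "$demo/enp0s3/forwarding"
check_forwarding "$demo" || echo "check failed"
rm -rf "$demo"
```

The demo reports enp0s3 and then prints `check failed`. Called without an argument, the function inspects the running kernel, including the `all` and `default` entries.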

Thanks for reading.

Updates

2015-11-18

As of systemd v228, the problem should no longer occur.

Behaviour of networkd's IPForward= option changed (again). It will no longer maintain a per-interface setting, but propagate one way from interfaces where this is enabled to the global kernel setting. The global setting will be enabled when requested by a network that is set up, but never be disabled again. This change was made to make sure IPv4 and IPv6 behaviour regarding packet forwarding is similar (as the Linux IPv6 stack does not support per-interface control of this setting) and to minimize surprises. [ANNOUNCEMENT "systemd" v228](http://lists.freedesktop.org/archives/systemd-devel/2015-November/035059.html)